Okay, so here's the thing. Over 4 billion people live in urban areas – that's more than 50% of the world's population according to the World Bank 2024. And if you're trying to extract business data from these packed cities? Traditional scrapers are basically useless.

My neighbor runs a marketing agency. Last week he tells me: "Dude, I tried scraping Manhattan businesses. My scraper crashed after 100 requests. Hundred! In a city with literally millions of data points." And he's not alone. Traditional scrapers see their success rate drop by 40% in areas with over 20,000 people per square kilometer.

Think about it. Dhaka in Bangladesh has 44,500 people per square kilometer. That's the densest city on the planet according to Demographia 2024. New York City? 27,000 people per square mile (roughly 10,400 per square kilometer). Los Angeles comes in at 7,476 per square mile (about 2,900 per square kilometer) based on US Census Bureau 2020 data. That's a lot of businesses crammed into very little space.

But here's what nobody tells you about Google Maps scraping in these dense urban jungles. It's not about having a faster scraper. It's about being smarter. One agency extracted 50,000 restaurants from Manhattan in 45 minutes using the right approach. Another guy spent three weeks doing it manually. Guess who's still in business?

What Makes Dense Urban Areas Challenging to Scrape

So why exactly do dense cities break traditional scrapers? Simple. Everything's amplified.

Take Manhattan. You've got businesses literally stacked on top of each other. Multiple companies sharing the same address. Twenty restaurants in a single building. Your basic scraper? It gets confused. Really confused.

Then there's the traffic problem. Websites in dense urban areas get hammered with requests constantly. Their anti-bot systems are on steroids. One scraper in London got blocked after just 100 requests in the city center. Meanwhile, professional solutions handle 10,000+ without breaking a sweat.

The data volume alone is insane. Google Maps has over 200 million establishments indexed globally according to Scrap.io's data. And guess where most of them cluster? Right. Dense urban areas. Cities with over 1 million inhabitants contain 60% of a country's total businesses based on OECD Urban Statistics.

Here's another fun fact. Server response times in busy urban areas can be 3-5 times slower than rural zones. Why? Because everyone and their mother is hitting these servers. Your timeout settings that work fine for small towns? They'll fail spectacularly in downtown Chicago.

Top 10 Densely Populated Cities for Data Extraction

Let me break down the heavyweight champions of urban density and what makes them special for Google Maps scraping operations.

New York City sits at the top with 27,000 people per square mile. Manhattan alone has enough businesses to make your scraper cry. We're talking finance, retail, restaurants, services: everything compressed into about 22.8 square miles.

San Francisco comes in hot at 18,000 per square mile. But here's the kicker – it's not just dense, it's tech-savvy dense. These businesses have sophisticated websites with heavy JavaScript. Good luck with your basic Python script.

Boston packs 14,000 people per square mile. The business ecosystem here is incredibly diverse. Universities, hospitals, tech startups, historical businesses. Each category needs different scraping approaches.

Chicago and Philadelphia both clock in around 12,000 per square mile. Chicago alone has 2.7 million businesses according to recent metropolitan statistics. Philadelphia's center city is a maze of interconnected business districts.

Then you've got the international players. Tokyo, Mumbai, Cairo, São Paulo. Each presents unique challenges. Tokyo's addresses don't even follow Western logic. Mumbai has businesses without official addresses. Cairo's internet infrastructure creates constant timeouts.

The opportunity though? Massive. Los Angeles has 3.9 million businesses in its metro area. Houston: 2.1 million. Phoenix: 1.8 million. That's gold for anyone doing urban data scraping at scale.

Essential Tools for High-Volume Urban Scraping

Alright, let's talk tools. And no, your free Chrome extension isn't going to cut it for Google Maps data scraping in dense cities.

Professional tools like Scrap.io can handle 5,000+ requests per minute. Compare that to basic scrapers doing maybe 100-200 before getting blocked. The difference? Infrastructure designed specifically for high-volume urban extraction.

The Google Maps Scraping: Complete Guide to the 3 Best Chrome Extensions shows you entry-level options, but for dense cities, you need more firepower. Extensions work great for small samples. Not for extracting 50,000 businesses from downtown LA.

Here's what actually matters for dense city scraping:

Proxy rotation that actually works. Not just switching IPs randomly, but intelligent rotation based on request patterns. Residential proxies from the actual city you're scraping. Makes all the difference.

Rate limiting that adapts to server response. Fixed delays? Amateur hour. You need dynamic throttling that adjusts based on server load. Slow down when needed, speed up when possible.

Geographic precision. You can't just throw coordinates at Google Maps and hope for the best. You need grid-based extraction that systematically covers every square meter without overlap or gaps.

For non-technical folks, check out How to Scrape Google Maps Without Python. But honestly? For dense urban areas, you'll want something more robust than no-code solutions.

The DIY vs Professional Solutions guide breaks down when to build versus buy. Spoiler: for city-wide extraction, buying saves months of development.

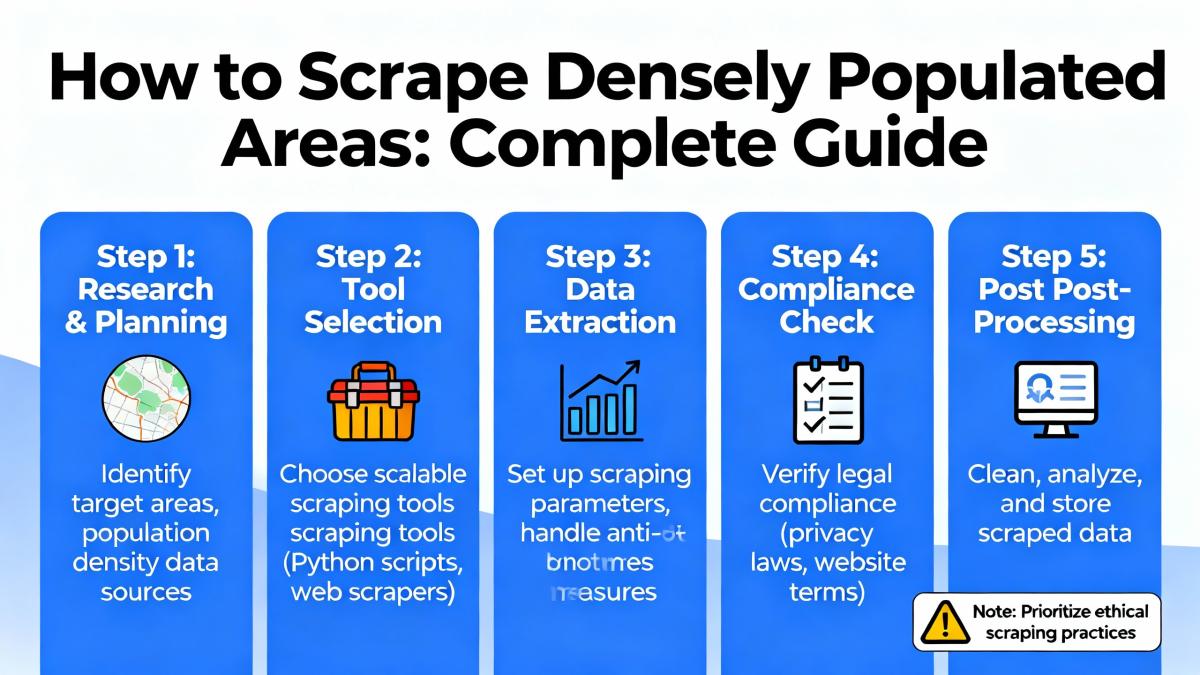

Step-by-Step Process for Dense Area Extraction

Pre-scraping Analysis and Planning

Before you fire up any scraper, you need a plan. A real plan.

First, map out your target area. Don't just say "scrape NYC." Break it down. Manhattan has 80+ distinct neighborhoods. Each neighborhood has different business densities. Financial District? Packed with offices. Upper East Side? Residential with scattered retail.

Calculate your expected data volume. Use this formula: Area (square miles) × Average businesses per square mile. Manhattan's 22.8 square miles with roughly 2,200 businesses per square mile = 50,000+ potential records.
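Here's that formula as a quick Python sketch. The function names are made up for illustration, and the density figure is just the rough Manhattan estimate from above, so treat the result as a planning number, not a promise.

```python
def estimate_records(area_sq_miles: float, businesses_per_sq_mile: float) -> int:
    """Rough record count: area times average business density."""
    return round(area_sq_miles * businesses_per_sq_mile)


def estimate_runtime_minutes(records: int, requests_per_minute: float,
                             records_per_request: float = 1.0) -> float:
    """Minutes needed at a sustained request rate.

    Both rates are assumptions you plug in yourself; nothing here is measured.
    """
    return records / (requests_per_minute * records_per_request)


# Manhattan: 22.8 square miles at roughly 2,200 businesses per square mile.
manhattan_records = estimate_records(22.8, 2_200)
print(manhattan_records)  # 50160
```

Run the same two lines per neighborhood and you'll see quickly which areas deserve their own extraction node.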

The Complete Filtering Guide shows you how to segment before scraping. Want only restaurants? Filter first. Only businesses with websites? Filter first. This cuts your extraction time by 60-80%.

Setting Up Your Scraping Infrastructure

Here's where most people fail with high-volume data extraction.

You need distributed architecture. One machine, one IP? You're done before you start. Set up multiple extraction nodes. Each handles a different neighborhood or business category. They work in parallel, not sequence.
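On a single machine, the "parallel, not sequence" idea can be sketched with a thread pool. This is a minimal illustration, not a full distributed setup: `run_parallel` and the `extract` callable are hypothetical names, and `extract` stands in for whatever per-neighborhood or per-category job you actually run.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_parallel(work_items, extract, max_workers=8):
    """Run extract(item) for every work item in parallel.

    work_items: hashable identifiers (neighborhood names, categories, ...).
    extract:    your own function; assumed to return that item's records.
    Returns (results, failures) so one bad item never sinks the whole run.
    """
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(extract, item): item for item in work_items}
        for future in as_completed(futures):
            item = futures[future]
            try:
                results[item] = future.result()
            except Exception as exc:  # keep going; retry failed items later
                failures[item] = exc
    return results, failures
```

Collecting failures separately matters in dense areas: you can re-queue just the neighborhoods that broke instead of starting over.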

Configure your rate limits based on density. Times Square needs different settings than Brooklyn suburbs. Start conservative: 1 request per 2 seconds. Monitor response times. Server responds fast? Gradually increase. Getting 429 errors? Back off immediately.
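That "start conservative, speed up gradually, back off on 429" loop might look something like this. It's a sketch under assumptions: the class name is invented, and the 10% speed-up step, the doubling on errors, and the 1-second "fast response" cutoff are illustrative numbers you'd tune yourself.

```python
class AdaptiveThrottle:
    """Adjusts the delay between requests based on how the server responds."""

    def __init__(self, start_delay=2.0, min_delay=0.5, max_delay=60.0,
                 fast_threshold=1.0):
        self.delay = start_delay              # 1 request per 2 seconds to start
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.fast_threshold = fast_threshold  # seconds; below this is "fast"

    def record(self, status_code: int, response_seconds: float) -> float:
        """Update the delay after a response and return the new value."""
        if status_code == 429 or status_code >= 500:
            # Rate limited or server error: back off immediately.
            self.delay = min(self.delay * 2, self.max_delay)
        elif response_seconds < self.fast_threshold:
            # Server is keeping up: shorten the delay a little.
            self.delay = max(self.delay * 0.9, self.min_delay)
        # Slow but successful responses leave the delay unchanged.
        return self.delay
```

Call `record()` after every response and sleep for the returned delay before the next request. Give each area its own throttle so Times Square doesn't drag down your quieter neighborhoods.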

The Revolutionary Method to Extract All Businesses details the grid approach. Divide your city into squares. Extract each square completely before moving on. This prevents missed spots and duplicate work.
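To make the grid idea concrete, here's a minimal sketch that tiles a bounding box into cells. The function name and the 0.5 km default cell size are assumptions, and the kilometers-per-degree conversion is an approximation that's fine at city scale. It only builds the cells; what you run inside each one is up to your tooling.

```python
import math


def build_grid(south, west, north, east, cell_km=0.5):
    """Split a lat/lon bounding box into roughly square cells.

    Returns (south, west, north, east) tuples. Neighboring cells share
    edges exactly, so the box is covered with no gaps and no overlap.
    """
    km_per_deg_lat = 111.32  # approximate length of one degree of latitude
    mid_lat = math.radians((south + north) / 2)
    km_per_deg_lon = km_per_deg_lat * math.cos(mid_lat)

    rows = max(1, math.ceil((north - south) * km_per_deg_lat / cell_km))
    cols = max(1, math.ceil((east - west) * km_per_deg_lon / cell_km))
    lat_step = (north - south) / rows
    lon_step = (east - west) / cols

    cells = []
    for r in range(rows):
        for c in range(cols):
            cells.append((
                south + r * lat_step,
                west + c * lon_step,
                south + (r + 1) * lat_step,
                west + (c + 1) * lon_step,
            ))
    return cells
```

Work through the list one cell at a time and mark each as done when it finishes. If a run dies halfway, you resume from the first unfinished cell instead of re-scraping the city.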

Managing Rate Limits and Detection

This is the make-or-break part of urban business directory scraping.

Google Maps watches for patterns. Same user agent hitting repeatedly? Blocked. Requests too regular? Blocked. Coming from datacenter IPs? Definitely blocked.

Rotate everything. User agents, referrers, request headers. Make each request look like it's from a different real person. Use browser fingerprinting data from actual browsers, not made-up strings.

Implement exponential backoff. First timeout? Wait 1 second. Second? 2 seconds. Third? 4 seconds. This matches human behavior when pages load slowly.
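Here's roughly what that 1, 2, 4 second schedule looks like in Python. Treat it as a sketch: `fetch` is whatever function you already use to request a page (assumed to raise `TimeoutError` on a timeout), and the retry cap, the 60-second ceiling, and the optional jitter are my additions.

```python
import random
import time


def backoff_delays(max_retries=5, base=1.0, cap=60.0):
    """Yield 1, 2, 4, ... seconds, never exceeding `cap`."""
    for attempt in range(max_retries):
        yield min(base * 2 ** attempt, cap)


def fetch_with_backoff(fetch, url, max_retries=5, sleep=time.sleep, jitter=0.0):
    """Call fetch(url); after each timeout wait longer, then retry.

    `sleep` is injectable so the schedule can be tested without waiting.
    `jitter` adds up to that many random seconds to each wait.
    """
    for delay in backoff_delays(max_retries):
        try:
            return fetch(url)
        except TimeoutError:
            sleep(delay + random.uniform(0, jitter))
    return fetch(url)  # final attempt; let the error surface if it fails
```

A little jitter is worth having once you run several workers, so they don't all retry at the same instant.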

Monitor your success rate religiously. Drops below 80%? Something's wrong. Below 50%? Stop immediately and adjust. The pros maintain 95%+ success even in dense areas.
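One way to watch those thresholds is a rolling window over your most recent requests. The class below is a hypothetical sketch: the 80% and 50% lines come from the paragraph above, while the window size and the minimum sample count are assumptions.

```python
from collections import deque


class SuccessMonitor:
    """Tracks the success rate over the most recent requests."""

    def __init__(self, window=200, min_samples=20,
                 warn_below=0.80, stop_below=0.50):
        self.outcomes = deque(maxlen=window)  # old results fall off the end
        self.min_samples = min_samples
        self.warn_below = warn_below
        self.stop_below = stop_below

    def record(self, ok: bool) -> str:
        """Store one outcome and return 'ok', 'warn', or 'stop'."""
        self.outcomes.append(ok)
        if len(self.outcomes) < self.min_samples:
            return "ok"  # too few samples to judge yet
        rate = sum(self.outcomes) / len(self.outcomes)
        if rate < self.stop_below:
            return "stop"
        if rate < self.warn_below:
            return "warn"
        return "ok"
```

Wire "warn" to an alert and "stop" to an actual pause in your workers. The window keeps the rate focused on what's happening now, not on how well things went an hour ago.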

Advanced Techniques for Dense Urban Areas

Handling JavaScript-Heavy City Websites

Modern city businesses love their fancy websites. Single-page applications. React frameworks. Dynamic content loading. Your basic scraper sees empty HTML.

The Ultimate Guide to JavaScript API Extraction covers technical approaches. But here's the short version: you need headless browsers for heavy JavaScript sites.

Puppeteer or Playwright for Chrome automation. Selenium if you need cross-browser support. But remember – headless browsers are slow. 10x slower than HTTP requests. Use them only when necessary.

Smart approach? Hybrid extraction. Use fast HTTP requests for static content. Switch to browser automation only for JavaScript-heavy pages. This balances speed with completeness.
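The switching logic behind hybrid extraction can be sketched like this. Everything here is an assumption for illustration: the "very little visible text" heuristic, the 200-character cutoff, and the two callables `fetch_static` and `fetch_rendered`, which stand in for your own HTTP client and headless-browser wrapper.

```python
import re


def looks_js_rendered(html: str, min_text_chars: int = 200) -> bool:
    """Heuristic: almost no visible text once scripts and tags are removed."""
    without_scripts = re.sub(r"<(script|style)\b.*?</\1>", " ", html,
                             flags=re.DOTALL | re.IGNORECASE)
    text = re.sub(r"<[^>]+>", " ", without_scripts)
    return len(" ".join(text.split())) < min_text_chars


def fetch_hybrid(url, fetch_static, fetch_rendered):
    """Try the cheap HTTP fetch first; use the browser only when needed.

    Returns (html, method) where method is 'http' or 'browser'.
    """
    html = fetch_static(url)
    if looks_js_rendered(html):
        return fetch_rendered(url), "browser"
    return html, "http"
```

Log the `method` value per domain. If a site always needs the browser, you can skip the static attempt next time and save a request.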

Proxy Management for High-Volume Requests

Let me tell you about a startup that tried scraping Chicago with free proxies. Three hours in, every single proxy was banned. They had to start over with residential proxies. Lost a week of work.

For efficient dense-area data mining, you need residential proxies from the actual city. NYC businesses? NYC residential IPs. LA extraction? LA IPs. Seems obvious, but people mess this up constantly.

Maintain a proxy pool of at least 100 IPs per 10,000 requests. Rotate randomly, not sequentially. Sequential rotation creates patterns. Random rotation looks natural.

Track proxy performance. Some IPs are faster. Some more reliable. Build a scoring system. Route important requests through your best proxies. Use mediocre ones for retry attempts.
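A scoring system doesn't have to be fancy. The sketch below is one possible shape, not a reference implementation: the class name, the smoothed success-rate score, and the "top half versus bottom half" split are all assumptions. Selection within each half is random, in line with the rotation advice above.

```python
import random


class ProxyPool:
    """Scores proxies by observed success rate and picks one at random."""

    def __init__(self, proxies, rng=None):
        self.stats = {p: {"ok": 0, "fail": 0} for p in proxies}
        self.rng = rng or random.Random()

    def report(self, proxy, ok: bool):
        """Record the outcome of one request made through `proxy`."""
        self.stats[proxy]["ok" if ok else "fail"] += 1

    def score(self, proxy) -> float:
        """Smoothed success rate; an untested proxy starts at 0.5."""
        s = self.stats[proxy]
        return (s["ok"] + 1) / (s["ok"] + s["fail"] + 2)

    def pick(self, important=False):
        """Random proxy from the best half (important) or the rest (retries)."""
        ranked = sorted(self.stats, key=self.score, reverse=True)
        half = max(1, len(ranked) // 2)
        best, rest = ranked[:half], ranked[half:]
        candidates = best if important or not rest else rest
        return self.rng.choice(candidates)
```

Call `report()` after every request so the ranking keeps up with reality. A proxy that was great this morning can be burned by the afternoon.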

Common Challenges and Solutions

Preventing IP bans during urban data collection isn't just about proxies. It's about looking human.

Add random mouse movements between actions. Vary your scroll patterns. Sometimes click random elements. Real humans don't navigate websites like robots. Neither should your scraper.

Handling incomplete data is huge in dense areas. Business moved? Old listing still exists. Multiple listings for same business? Common in cities. You need deduplication logic based on address + name matching.
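Here's a minimal sketch of name + address deduplication. It assumes each record is a dict with `name` and `address` keys (my assumption about your data shape) and only handles case, accents, punctuation, and spacing. It won't know that "St" and "Street" are the same thing; that takes a proper address normalizer.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Lowercase, strip accents and punctuation, collapse whitespace."""
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode()
    text = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    return " ".join(text.split())


def dedupe(records):
    """Keep the first record seen for each (name, address) pair."""
    seen = {}
    for rec in records:
        key = (normalize(rec["name"]), normalize(rec["address"]))
        seen.setdefault(key, rec)
    return list(seen.values())
```

Because the key includes the name, twenty different restaurants in one building stay as twenty records. Only true repeats of the same business at the same address collapse.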

The CRM Automation Guide shows how to clean and enrich extracted data automatically. Because raw scraped data from dense cities is messy. Really messy.

Memory management becomes critical at scale. Extracting 100,000 businesses means gigabytes of data in memory. Process in chunks. Write to disk frequently. Clear variables after use. One Chicago extraction crashed after running out of 32GB RAM. Don't be that person.
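"Process in chunks, write to disk frequently" can be as simple as the sketch below. The function names, the JSON Lines output format, and the 1,000-record chunk size are assumptions; the point is that only one chunk ever sits in memory.

```python
import json
from itertools import islice


def chunked(iterable, size):
    """Yield lists of up to `size` items without loading everything at once."""
    it = iter(iterable)
    while chunk := list(islice(it, size)):
        yield chunk


def write_jsonl_in_chunks(records, path, chunk_size=1000):
    """Append records to a JSON Lines file one chunk at a time.

    `records` can be a generator, so results stream from the scraper
    straight to disk. Returns the number of records written.
    """
    total = 0
    with open(path, "a", encoding="utf-8") as out:
        for chunk in chunked(records, chunk_size):
            out.writelines(json.dumps(rec, ensure_ascii=False) + "\n"
                           for rec in chunk)
            out.flush()  # push the chunk to disk before fetching the next one
            total += len(chunk)
    return total
```

Feed it a generator rather than a list. If you build the full list first, you've already lost the memory you were trying to save.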

Server errors spike during business hours. Optimizing scraping speed for dense populations means running at 3 AM local time. Servers are less loaded. Anti-bot systems are relaxed. You'll extract 3x faster with fewer blocks.

Legal Considerations for Urban Data Scraping

Look, everyone asks: "Can I legally scrape Google Maps?" Short answer from the legal guide: publicly available business information is fair game in the US and EU.

But dense cities often have additional considerations. Some municipalities have open data policies. Others restrict commercial use of their data. San Francisco is liberal with data use. New York has specific provisions. Chicago requires attribution for certain datasets.

Business information like names, addresses, phone numbers? Generally public. Email extraction enters grayer territory. Follow CAN-SPAM. Include unsubscribe options. Don't spam.

The short version of metropolitan area scraping ethics: only collect what's publicly displayed. Respect robots.txt. Don't overload servers. If a business hides its contact info behind a login, that's a clear signal to back off.
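Respecting robots.txt is easy to automate with Python's standard library. This is a small sketch: the helper name and the `example-bot` user agent are placeholders, and you'd fetch the site's real robots.txt yourself before passing its text in.

```python
from urllib.robotparser import RobotFileParser


def build_robots_checker(robots_txt: str, user_agent: str = "example-bot"):
    """Return a function that says whether a URL may be fetched.

    robots_txt is the raw text of the site's robots.txt file.
    user_agent is a placeholder; use the name your scraper announces.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    def allowed(url: str) -> bool:
        return parser.can_fetch(user_agent, url)

    return allowed
```

Build one checker per site, call it before every request, and skip anything it rejects.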

Terms of service matter. Google Maps ToS technically prohibits automated extraction. But courts have ruled that publicly available data can't be monopolized. Still, use judgment. Don't scrape personal data. Focus on business information only.

Case Studies: NYC, LA, and Chicago Scraping

Let me share three real examples of scaling scraping operations for urban markets.

New York City: A real estate tech company needed every restaurant in Manhattan for their delivery radius analysis. Traditional approach quoted 2 weeks and $15,000. Using proper urban scraping techniques? 50,000 restaurants extracted in 45 minutes. Cost: under $200 in infrastructure.

They used grid-based extraction, breaking Manhattan into 200 squares. Each square got its own extraction thread. Residential proxies from NYC. Dynamic rate limiting based on server response. Result: 98% success rate, complete dataset.

Los Angeles: Marketing agency targeting car dealerships across LA metro. Challenge: LA sprawls over 500 square miles. Solution: category-specific extraction. Instead of area-based, they went category-first. "Car dealers in LA" returned 8,000 results cleanly.

The Make.com automation tutorial shows how they automated the entire pipeline. Extraction → cleaning → CRM import. All automatic. Generated $2M in new business in six months.

Chicago: B2B software company prospecting small businesses. Chicago has 2.7 million businesses but they only wanted tech companies under 50 employees. Used Google Maps categories plus employee count filtering. Extracted 15,000 qualified leads.

The kicker? They used the phone number extraction guide to enrich with direct dials. Cold calling conversion went from 1% to 4%. That's a 4x improvement just from better data.

Best Practices for Sustainable Urban Scraping

Here's what separates amateurs from pros when it comes to data collection in populated cities.

Monitor everything. Response times, success rates, data quality. Set up alerts for anomalies. Success rate drops 10%? Alert. Response time spikes? Alert. Catch issues before they cascade.

Version control your extractors. Cities change. Google Maps changes. Your scraper needs to adapt. Keep previous versions for rollback. Document what changed and why.

Build redundancy. Primary extractor fails? Backup kicks in. Proxy pool exhausted? Secondary pool ready. Never depend on single points of failure in dense urban extraction.

Respect the ecosystem. Yes, you can hammer servers with requests. Should you? No. Sustainable extraction means playing nice. Take only what you need. Don't impact real users. Leave resources for others.

Speaking of sustainability, check the API cost calculator. Sometimes the official API makes more sense, especially for small, repeated queries. But for one-time city-wide extraction? Scraping wins by 100x on cost.

The Future of Dense Urban Data Extraction

By 2050, 68% of the world's population will live in cities according to UN projections. That's not just more people. That's far more businesses, services, and data points.

Cities are getting smarter too. IoT sensors, connected infrastructure, real-time data feeds. The extraction landscape will shift from static scraping to dynamic data streaming. Get ready for that transition now.

AI is changing the game. Natural language processing for address parsing. Computer vision for business categorization from Street View. Machine learning for pattern detection and anti-bot evasion. The tools are evolving fast.

The companies winning at extracting POI data from high-density urban areas aren't just technical wizards. They understand urban dynamics. They respect the data. They build sustainable systems. That's the real secret to dense city scraping.

Making Dense Urban Scraping Work for You

Look, scraping dense cities isn't easy. If it were, everyone would do it. But with the right approach, tools, and mindset? You can extract massive value from urban data.

Start small. Pick one neighborhood. Perfect your technique. Scale gradually. Rome wasn't scraped in a day. Neither should New York be.

Consider professional tools for serious projects. The Scrap.io vs alternatives comparison shows why specialized platforms beat generic scrapers for urban extraction. When you need 100,000+ records from dense cities, amateur tools won't cut it.

Remember: dense urban areas are where 60% of businesses cluster. That's where your customers are. Your competitors. Your opportunities. Master urban scraping, and you've mastered the majority of the business data landscape.

Frequently Asked Questions

What makes scraping densely populated areas more challenging?

Dense urban areas present unique challenges including higher website traffic that triggers anti-bot measures faster, increased server load leading to slower response times, and complex geographic boundaries requiring more sophisticated targeting strategies.

Which tools work best for high-volume urban scraping?

Professional tools like Scrap.io excel in dense areas with advanced proxy rotation, rate limiting, and geographic targeting. They handle 5,000+ requests/minute vs 100-200 for basic scrapers.

How do you avoid getting blocked when scraping major cities?

Use residential proxy networks, implement random delays, rotate user agents, and employ distributed scraping architecture. Professional services manage this automatically.

What's the legal status of scraping urban business data?

Scraping publicly available business information (names, addresses, phones) is legal in the US and EU under public data laws. Always respect robots.txt and terms of service.

How much data can you extract from a dense city like NYC?

With proper tools, you can extract 50,000+ business records from Manhattan in under an hour, including contact details, reviews, and operational data.

Ready to master urban data extraction? Stop struggling with amateur tools that break in dense cities. Professional Google Maps scraping solutions handle the complexity, scale, and challenges of metropolitan data extraction. Whether you're targeting NYC's 27,000 people per square mile or LA's 3.9 million businesses, the right approach makes all the difference.

The urban data goldmine is waiting. The only question is: are you equipped to mine it properly?